Artificial intelligence has been experiencing a huge boom lately. Enthusiasm for the technology runs so high that many people overlook the risks it could pose in the future. AI experts are now sounding the alarm, warning that companies should start addressing these risks before it is too late.

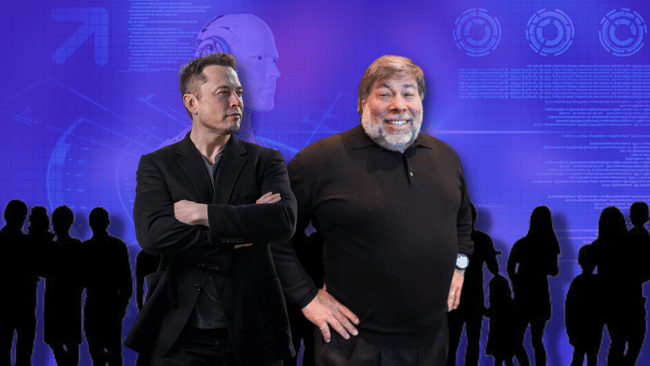

In a recent open letter entitled "Pause Giant AI Experiments: An Open Letter", AI experts call on companies working in the field to temporarily halt the development of the most powerful generative AI systems. The letter, signed by more than 1,000 people including Elon Musk, Steve Wozniak, Evan Sharp, Craig Peters and Yoshua Bengio, highlights how uncertain the long-term impact of these systems remains.

In the letter, the experts propose a pause of at least six months, to be used to develop the safety protocols and regulatory procedures needed for AI technology to advance safely. The pause would apply only to the training of AI systems more powerful than GPT-4, the generative AI model developed by OpenAI. If the call is not heeded, the letter suggests that governments step in and impose a moratorium.

Companies investing in the development of generative AI and related technologies include giants such as Microsoft $MSFT, Alphabet $GOOGL, Alibaba $BABA and Baidu $BIDU. The letter asks for safety protocols and for powerful systems to be developed only once their impact can be expected to be positive and their risks manageable. In response, companies might pause their work and draw up new policies, or at least weigh the potential impacts more carefully and act accordingly.

GPT-4 as a trigger for potential problems

GPT-4, which was introduced recently, has impressed users with its abilities in conversation, songwriting and summarizing long documents. Its reception has prompted competitors such as Alphabet Inc. to accelerate the development of similar large language models. AI experts, however, are calling for a slowdown until we better understand the implications and the potential harm to society.

The Future of Life Institute, the nonprofit organisation that issued the open letter, is funded primarily by the Musk Foundation, the London-based group Founders Pledge and the Silicon Valley Community Foundation. It argues that powerful AI systems should be developed only once we are confident that their effects will be positive and their risks manageable. The organisation also calls for a regulatory body to ensure that AI development serves the public interest.

Elon Musk, a co-founder of OpenAI and one of the letter's signatories, is known for his warnings about the risks of AI development. The letter he signed calls for shared safety protocols developed by independent experts and urges developers to work with policymakers on AI governance.

OpenAI's chief executive, Sam Altman, and the CEOs of Alphabet and Microsoft, Sundar Pichai and Satya Nadella, were not among the signatories. Nonetheless, the letter is an important step towards opening a discussion on the future of AI and the risks it poses.

Possible implications of the letter

The letter could have several implications. One is that companies and research institutions involved in AI development temporarily halt their work and rethink their practices. This would give experts and regulators time to review developments to date and take the measures needed to ensure safety and sustainability in the AI field.

Alternatively, the letter could raise awareness of the risks associated with AI development and of the need for safety protocols and regulatory procedures. Companies could then focus more on building safer and more ethical AI systems that benefit society.

Finally, the letter could inspire governments and international organisations to consider introducing regulations and moratoriums on AI development. This would help ensure that AI is developed in the best interests of society and in a way that minimises potential harm.

Regardless of the letter's outcome, the debate on the safety and ethics of AI development is clearly becoming more urgent. As AI systems such as GPT-4 become more pervasive in everyday life, we need to focus on how these technologies can serve society without compromising the privacy, security or interests of individuals.

On the positive side, GPT-4 and similar systems could be used for the public good, for example to help tackle environmental, health or economic problems. On the other hand, it is important to consider the risks, such as misuse of the technology to spread misinformation, manipulate public opinion or widen inequality in access to technology.

The open letter "Pause Giant AI Experiments" is a call to reflect on the direction AI development will take. Whether experts, companies and governments can reach a consensus remains an open question. However, it is important that scientists, industry leaders and policymakers work together to find ways to make AI development safe and to regulate it in the best interests of humanity.

WARNING: I am not a financial advisor, and this material does not serve as a financial or investment recommendation. The content of this material is purely informational.